#CSA

UNIT 4

UNIT-IV: Input/Output Organization: Peripheral Devices, I/O interfaces, I/O-mapped I/O and memory-mapped I/O, interrupts and interrupt handling mechanisms, vectored interrupts, synchronous vs. asynchronous data transfer, Direct Memory Access.

Text Books:

1. Computer System Architecture, M. Morris Mano, PHI

2. Computer Organization, V. C. Hamacher, Z. G. Vranesic and S. G. Zaky, McGraw Hill

NOTES:

Methods of Data transfer:

1. Programmed I/O: In programmed I/O, the processor repeatedly checks (polls) whether a device is ready for data transfer. When an I/O device is ready, the processor dedicates itself fully to transferring the data between the I/O device and memory.

2. Interrupt-driven I/O: In interrupt-driven I/O, the processor does not wait for the device; whenever the device is ready for data transfer, it raises an interrupt to the processor.

These two modes of data transfer are not efficient for transferring a large block of data, because the processor takes part in the transfer of every word. A DMA controller performs this task at a faster rate and is effective for transferring large data blocks. So,

3. Direct memory access (DMA): It is another mode of data transfer between memory and I/O devices, in which the DMA controller moves the data without the participation of the processor.
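The busy-waiting behaviour of programmed I/O can be sketched as a toy Python model. It does not touch real hardware: `SimulatedDevice` is a hypothetical stand-in for a device with a status flag and a data register, and it is assumed to become ready after a fixed number of status reads.

```python
class SimulatedDevice:
    """Hypothetical input device that becomes ready after `delay` status reads."""

    def __init__(self, data, delay):
        self.data = list(data)      # words the device will deliver, in order
        self.delay = delay          # status reads needed before each word is ready
        self._countdown = delay

    def status_ready(self):
        # Models reading the device's status flag.
        if self._countdown == 0:
            return True
        self._countdown -= 1
        return False

    def read_data(self):
        # Models reading the data register; the next word needs a fresh delay.
        self._countdown = self.delay
        return self.data.pop(0)


def programmed_io_read(device, count):
    """Transfer `count` words; the CPU polls the status flag before every word."""
    memory = []
    wasted_polls = 0
    for _ in range(count):
        while not device.status_ready():   # CPU is tied up doing no useful work
            wasted_polls += 1
        memory.append(device.read_data())  # CPU itself moves each word to memory
    return memory, wasted_polls


words, polls = programmed_io_read(SimulatedDevice([10, 20, 30], delay=3), 3)
print(words, polls)  # [10, 20, 30] 9
```

The `wasted_polls` count grows with the device's delay; that idle polling time is what interrupt-driven I/O and DMA give back to the processor.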

RISC and CISC Processors

RISC stands for Reduced Instruction Set Computer and CISC stands for Complex Instruction Set Computer.

| S.No. | RISC | CISC |
|---|---|---|
| 1. | Simple instruction set | Complex instruction set |
| 2. | Large number of registers | Fewer registers |
| 3. | Larger programs | Smaller programs |
| 4. | Simple processor circuitry (fewer transistors) | Complex processor circuitry (more transistors) |
| 5. | More RAM usage | Less RAM usage |
| 6. | Simple addressing modes | Variety of addressing modes |
| 7. | Fixed-length instructions | Variable-length instructions |
| 8. | Fixed number of clock cycles per instruction | Variable number of clock cycles per instruction |

QUESTIONS: COMPILED FROM UNIVERSITY QUESTION PAPERS:

1. What is the Direct Memory Access technique? Explain the role of the DMA controller with a diagram.

Ans:

Direct Memory Access (DMA) transfers blocks of data between memory and the peripheral devices of the system without the participation of the processor. The unit that controls direct memory access is called a DMA controller.

- DMA is an abbreviation of direct memory access.

- DMA is a method of data transfer between main memory and peripheral devices.

- The hardware unit that controls the DMA transfer is a DMA controller.

- DMA controller transfers the data to and from memory without the participation of the processor.

- The processor provides the DMA controller with the starting address and the word count of the data block to be transferred to or from memory, and then frees the bus so that the DMA controller can transfer the block.

- The DMA controller transfers the data block at a faster rate because the data is accessed directly by the I/O device and does not have to pass through the processor, which saves clock cycles.

- The DMA controller transfers a block of data to or from memory in one of three modes: burst mode, cycle stealing mode and transparent mode.

- DMA can be configured in various ways: a DMA controller can be part of an individual I/O device, or all the peripherals attached to the system may share the same DMA controller.
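The three transfer modes differ in how the DMA controller shares the system bus with the CPU. The toy timeline below is a sketch under simplifying assumptions, not a model of a particular controller: one word moves per bus cycle, the DMA request is already pending at cycle 0, and the CPU's bus usage is given as a hypothetical list of booleans (`cpu_bus_cycles`).

```python
def bus_schedule(mode, cpu_bus_cycles, dma_words):
    """Return who owns the system bus in each bus cycle: 'CPU', 'DMA' or '-' (idle).

    cpu_bus_cycles: one boolean per CPU machine cycle; True means the CPU
                    needs the system bus in that cycle.
    """
    timeline = []
    remaining = dma_words
    if mode == "burst":
        # DMA keeps the bus until the whole block is moved; the CPU waits.
        timeline += ["DMA"] * remaining
        timeline += ["CPU" if busy else "-" for busy in cpu_bus_cycles]
    elif mode == "cycle_steal":
        # DMA steals one bus cycle per word, then hands the bus back to the CPU.
        for busy in cpu_bus_cycles:
            if remaining:
                timeline.append("DMA")
                remaining -= 1
            timeline.append("CPU" if busy else "-")
        timeline += ["DMA"] * remaining
    elif mode == "transparent":
        # DMA only uses cycles in which the CPU does not need the bus,
        # so the CPU is never delayed.
        for busy in cpu_bus_cycles:
            if busy:
                timeline.append("CPU")
            elif remaining:
                timeline.append("DMA")
                remaining -= 1
            else:
                timeline.append("-")
        timeline += ["DMA"] * remaining   # words left over after the CPU pattern ends
    else:
        raise ValueError("unknown mode: " + mode)
    return timeline


pattern = [True, False, True, False]      # CPU uses the bus in cycles 0 and 2
for mode in ("burst", "cycle_steal", "transparent"):
    print(mode, bus_schedule(mode, pattern, 2))
```

In the transparent timeline the CPU's bus cycles stay in their original positions, while burst mode pushes all of them back until the block is done.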

Thus DMA is a convenient mode of data transfer, and it is preferred over programmed I/O and interrupt-driven I/O for transferring large blocks of data.

- Whenever an I/O device wants to transfer data to or from memory, it sends a DMA request (DRQ) to the DMA controller. The DMA controller accepts this DRQ and asks the CPU to hold for a few clock cycles by sending it a Hold request (HOLD).

- The CPU receives the Hold request from the DMA controller, relinquishes the bus and sends a Hold acknowledgement (HLDA) to the DMA controller.

- After receiving HLDA, the DMA controller sends a DMA acknowledge (DACK) to the I/O device to indicate that the data transfer can be performed, takes charge of the system bus and transfers the data to or from memory.

- When the data transfer is complete, the DMA controller raises an interrupt to inform the processor that the transfer is finished. The processor then takes control of the bus again and resumes processing where it left off.
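The handshake sequence above can be traced with a small Python model. It is a sketch under simplifying assumptions, not a model of a specific chip: the `CPU` and `DMAController` classes are hypothetical, the hold request is labeled HOLD (the name of the corresponding 8085 input pin), and one word is written per loop step.

```python
class CPU:
    """Minimal stand-in for the processor's bus-arbitration behaviour."""

    def __init__(self):
        self.bus_owner = "CPU"
        self.interrupt_pending = False

    def hold(self):
        # On HOLD, the CPU relinquishes the bus and answers with HLDA.
        self.bus_owner = "DMA"
        return "HLDA"

    def release(self):
        # When the hold request is withdrawn, the CPU takes the bus back.
        self.bus_owner = "CPU"

    def interrupt(self):
        self.interrupt_pending = True


class DMAController:
    def __init__(self, cpu, memory):
        self.cpu = cpu
        self.memory = memory
        self.address = 0
        self.count = 0
        self.trace = []

    def program(self, start_address, word_count):
        # Set up by the CPU beforehand: starting address and word count.
        self.address = start_address
        self.count = word_count

    def service_request(self, device_words):
        self.trace.append("DRQ")             # I/O device -> DMA controller
        self.trace.append("HOLD")            # DMA controller -> CPU
        self.trace.append(self.cpu.hold())   # CPU -> DMA controller: HLDA
        self.trace.append("DACK")            # DMA controller -> I/O device
        for word in device_words[:self.count]:
            self.memory[self.address] = word  # device to memory, bypassing the CPU
            self.address += 1
            self.count -= 1
        self.cpu.release()                   # bus handed back to the CPU
        self.cpu.interrupt()                 # signal that the block is done
        self.trace.append("INTR")
        return self.trace


cpu = CPU()
memory = [0] * 8
dmac = DMAController(cpu, memory)
dmac.program(start_address=2, word_count=3)
print(dmac.service_request([7, 8, 9, 10]))  # ['DRQ', 'HOLD', 'HLDA', 'DACK', 'INTR']
print(memory)                               # [0, 0, 7, 8, 9, 0, 0, 0]
```

Only three of the four offered words are stored because the word count programmed by the CPU limits the block length.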

2. Explain in detail the structure of a magnetic disk system. Also mention how its capacity can be found.

A magnetic disk primarily consists of a rotating magnetic surface (called a platter) and a mechanical arm that moves over it. The arms for all the surfaces are mounted together and move as a single unit, which is often called a “comb”.

The mechanical arm is used to read from and write to the disk. The data on a magnetic disk is read and written using a magnetization process.

A magnetic disk is a storage device whose shape resembles a gramophone record. The disk is coated on both sides with a thin film of magnetic material. This magnetic material can store either ‘1’ or ‘0’ permanently: it has a square-loop hysteresis curve, so it remains magnetized in one of two possible directions, which correspond to binary ‘1’ and ‘0’.

Bits are stored on the magnetized surface as marks along concentric circles known as tracks. The tracks are commonly divided into sections known as sectors.

In this system, the smallest quantity of data that can be transferred is a sector.

A magnetic disk contains several platters. Each platter is divided into circular tracks. Tracks near the centre are shorter than tracks farther from the centre. Each track is further divided into sectors, as shown in the figure.

Tracks at the same distance from the centre form a cylinder. A read/write head is used to read data from a sector of the magnetic disk.

The speed of the disk is described by two parameters:

- Transfer rate: This is the rate at which the data moves from disk to the computer.

- Random access time: It is the sum of the seek time and rotational latency.

Seek time is the time taken by the arm to move to the required track.

Rotational latency is the time taken for the platter to rotate until the required sector of the track comes under the read/write head.
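Both speed parameters can be computed from a few drive figures. This is a minimal sketch, assuming the common textbook convention that the average rotational latency is half a revolution and that one full track passes under the head per revolution; the drive numbers in the example are illustrative only.

```python
def average_rotational_latency_ms(rpm):
    """Average latency, taken as half a revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def random_access_time_ms(avg_seek_ms, rpm):
    """Random access time = seek time + rotational latency."""
    return avg_seek_ms + average_rotational_latency_ms(rpm)


def transfer_rate_bytes_per_s(bytes_per_track, rpm):
    """One full track passes under the head per revolution."""
    return bytes_per_track * rpm / 60


# Illustrative numbers: 4 ms average seek, 6000 rpm, 51,200 bytes per track.
print(random_access_time_ms(4, 6000))           # 9.0
print(transfer_rate_bytes_per_s(51_200, 6000))  # 5120000.0
```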

Magnetic Disk in Computer Architecture-

In computer architecture,

- Magnetic disk is a storage device that is used to write, rewrite and access data.

- It uses a magnetization process.

Architecture-

- A disk pack consists of several platters.

- Each platter consists of concentric circles called tracks.

- These tracks are further divided into sectors, which are the smallest divisions of the disk.

- A cylinder is formed by combining the tracks at a given radius of a disk pack.

- A mechanical arm carries the read/write head.

- The head is used to read from and write to the disk.

- The head has to reach a particular track and then wait for the platter to rotate.

- The rotation brings the required sector of the track under the head.

- Each platter has 2 surfaces, top and bottom, and both surfaces are used to store data.

- Each surface has its own read/write head.

- All the tracks of a disk have the same storage capacity.

- This is possible because each track has a different storage density.

- Storage density decreases as we move from one track to the next track away from the center.

- The innermost track has the maximum storage density.

- The outermost track has the minimum storage density.

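Storage Density-

Storage density of a track = Capacity of the track / Circumference of the track

Thus, since all tracks have the same capacity, the storage density of a track is inversely proportional to its radius.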

Capacity Of Disk Pack-

Capacity of a disk pack is calculated as-

Capacity of a disk pack = Total number of surfaces x Number of tracks per surface x Number of sectors per track x Storage capacity of one sector
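The capacity formula is a straight product, shown here with a worked example. The disk pack figures are hypothetical, chosen only to illustrate the arithmetic.

```python
def disk_pack_capacity(surfaces, tracks_per_surface, sectors_per_track, bytes_per_sector):
    """Capacity = surfaces x tracks/surface x sectors/track x bytes/sector."""
    return surfaces * tracks_per_surface * sectors_per_track * bytes_per_sector


# Hypothetical disk pack: 8 platters (16 usable surfaces), 128 tracks per surface,
# 256 sectors per track, 512 bytes per sector.
capacity = disk_pack_capacity(16, 128, 256, 512)
print(capacity)           # 268435456
print(capacity // 2**20)  # 256 (MiB)
```

Because every factor here is a power of two, the result is 2^4 x 2^7 x 2^8 x 2^9 = 2^28 bytes.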

3. What do you understand by computer peripherals? Explain any two computer peripherals.

Ans:

A peripheral device is a device that is connected to a computer system but is not part of the core computer system architecture. More loosely, the term peripheral is often used to refer to any device external to the computer case.

Peripheral devices are generally classified into three basic categories:

1. Input Devices: An input device converts incoming data and instructions into a pattern of electrical signals in binary code that a digital computer can process. For some time, punched-card and paper-tape readers were extensively used for input, but these have now been supplanted by more efficient devices. Examples: keyboard, mouse, scanner, microphone.

2. Output Devices: An output device performs the reverse of the input process: it translates digitized signals into a form intelligible to the user. Output devices are also used to send data from one computer system to another. Examples: monitors, headphones, printers.

3. Storage Devices: Storage devices store the data that the system needs for performing its operations, and they are among the most essential peripherals. Examples: hard disk, magnetic tape, flash memory.

4. What is address space? Explain isolated vs. memory-mapped I/O.

Ans:

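The address space of a processor is the set of all addresses it can generate on its address bus; for example, the 8085 has a 16-bit address bus and therefore an address space of 2^16 = 65,536 locations. In a microprocessor system, there are two methods of interfacing input/output (I/O) devices: memory-mapped I/O and I/O-mapped (isolated) I/O.

The key difference between memory-mapped I/O and isolated I/O is that in memory-mapped I/O, the same address space is used for both memory and I/O devices, whereas in I/O-mapped I/O, separate address spaces are used for memory and I/O devices.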

Memory-mapped I/O:

The processor does not differentiate between memory and I/O; it treats I/O devices like memory locations.

I/O addresses are as wide as memory addresses. E.g. in the 8085, I/O addresses are 16-bit because memory addresses are also 16-bit.

This allows a larger number of I/O devices. E.g. in the 8085, up to 2^16 = 65,536 I/O devices can be addressed (in practice fewer, because memory uses part of the same address space).

Only two control signals are needed in the system: Read and Write.

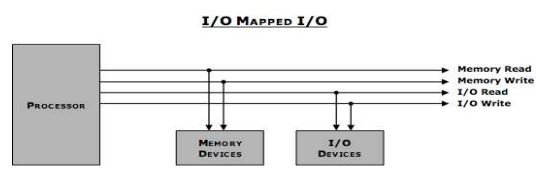

I/O mapped I/O:

The processor differentiates between I/O devices and memory; it isolates I/O devices in a separate address space.

I/O addresses are smaller than memory addresses. E.g. in the 8085, I/O addresses are 8-bit though memory addresses are 16-bit.

This allows access to only a limited number of I/O devices. E.g. in the 8085, only up to 2^8 = 256 I/O devices can be accessed.

Four control signals are needed: Memory Read, Memory Write, I/O Read and I/O Write.

| Basis for Comparison | Memory-mapped I/O | I/O-mapped I/O |
|---|---|---|
| Basic | I/O devices are treated as memory. | I/O devices are treated as I/O devices. |
| Allotted address size | 16-bit (A0 – A15) | 8-bit (A0 – A7) |
| Data transfer instructions | Same for memory and I/O devices. | Different for memory and I/O devices. |
| Cycles involved | Memory read and memory write | I/O read and I/O write |
| Number of I/O ports that can be interfaced | Large (around 64K) | Comparatively small (around 256) |
| Control signal | No separate control signal is needed for I/O devices. | Special control signals are used for I/O devices. |
| Efficiency | Less | Comparatively more |
| Decoder hardware | More decoder hardware required. | Less decoder hardware required. |
| IO/M’ | During memory read or memory write operations, IO/M’ is kept low. | During I/O read and I/O write operations, IO/M’ is kept high. |
| Data movement | Between any register and ports. | Between accumulator and ports. |
| Logical approach | Simple | Complex |
| Usability | In small systems where memory requirement is less. | In systems that need large memory space. |
| Speed of operation | Slow | Comparatively fast |
| Example of instruction | LDA XXXXH, STA XXXXH, MOV A, M | IN XXH, OUT XXH |
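The difference between the two schemes is essentially one of address decoding. The Python sketch below models it under stated assumptions: the class names are hypothetical, the memory-mapped window starting at `io_base = 0xFF00` is an arbitrary choice for illustration, and the method comments only indicate which 8085 instruction each operation resembles.

```python
class MemoryMappedBus:
    """One 16-bit address space shared by memory and I/O ports.

    Addresses from io_base up to 0xFFFF are (hypothetically) decoded as I/O ports.
    """

    def __init__(self, io_base=0xFF00):
        self.io_base = io_base
        self.memory = bytearray(io_base)   # RAM occupies addresses below io_base
        self.ports = {}                    # port address -> last value written

    def write(self, address, value):
        # The same instruction (e.g. STA) serves memory and I/O; only the address differs.
        if not 0 <= address <= 0xFFFF:
            raise ValueError("address must fit in 16 bits")
        if address >= self.io_base:
            self.ports[address] = value
        else:
            self.memory[address] = value

    def read(self, address):
        if not 0 <= address <= 0xFFFF:
            raise ValueError("address must fit in 16 bits")
        if address >= self.io_base:
            return self.ports.get(address, 0)
        return self.memory[address]


class IsolatedIOBus:
    """Separate spaces: 64K memory locations and 256 I/O ports."""

    def __init__(self):
        self.memory = bytearray(2**16)     # selected when IO/M' is low
        self.ports = bytearray(2**8)       # selected when IO/M' is high

    def mem_write(self, address, value):   # like STA
        self.memory[address] = value

    def mem_read(self, address):           # like LDA
        return self.memory[address]

    def io_out(self, port, value):         # like OUT, with an 8-bit port number
        self.ports[port] = value

    def io_in(self, port):                 # like IN
        return self.ports[port]


mm = MemoryMappedBus()
mm.write(0x2050, 7)   # ordinary memory location
mm.write(0xFF01, 9)   # same kind of write, but it lands on an I/O port
print(mm.read(0x2050), mm.read(0xFF01), len(mm.memory))  # 7 9 65280

iso = IsolatedIOBus()
iso.mem_write(0x05, 1)
iso.io_out(0x05, 2)   # same number, different address space
print(iso.mem_read(0x05), iso.io_in(0x05))  # 1 2
```

The memory-mapped model gives up 256 bytes of RAM to the I/O window, while the isolated model keeps the full 64K of memory but offers only 256 ports.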

5. Define priority interrupt. Explain daisy-chaining priority interrupt with a block diagram.

OR

How does daisy-chaining priority interrupt work?

What is priority interrupt in computer architecture?

Ans:

A priority interrupt is a system that decides the order in which devices generating interrupt signals simultaneously are serviced by the CPU. High-speed transfer devices are generally given high priority, and slow devices low priority.

When I/O devices are ready for I/O transfer, they generate an interrupt request signal to the computer.

The CPU receives this signal, completes the instruction it is currently executing, suspends the current program and then services the transfer request.

But what if multiple devices generate interrupts simultaneously? In that case, we need a way to decide which interrupt is serviced first.

In other words, we have to set a priority among all the devices for systematic interrupt servicing. Defining the priority among devices so as to know which one is serviced first in case of simultaneous requests is called a priority interrupt system. This can be done with either software or hardware methods.

SOFTWARE METHOD – POLLING

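In polling, a common branch address is used for all interrupts. The program at this address tests the interrupt sources one by one in order of priority: the highest-priority source is tested first, and if its interrupt signal is on, control branches to the service routine for that source; otherwise the next lower-priority source is tested. The major disadvantage of this method is that it can be quite slow when there are many interrupt sources. To overcome this, we can use a hardware solution, one of which involves connecting the devices in series. This is called the daisy-chaining method.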
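The polling procedure can be sketched in a few lines. The list `interrupt_flags` is a hypothetical stand-in for the interrupt flag bits that a real polling routine would read from each device; index 0 is assumed to be the highest-priority source.

```python
def poll(interrupt_flags):
    """Software polling in priority order.

    interrupt_flags[0] belongs to the highest-priority source. Returns
    (index of the source to service or None, number of flags tested).
    """
    tests = 0
    for source, pending in enumerate(interrupt_flags):
        tests += 1                 # each test costs instructions in a real routine
        if pending:
            return source, tests   # branch to this source's service routine
    return None, tests


print(poll([False, False, True, True]))  # (2, 3)
```

The `tests` count shows why polling is slow: a low-priority source is reached only after every higher-priority flag has been checked.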

HARDWARE METHOD – DAISY CHAINING:

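The daisy-chaining method establishes priority among interrupt sources in hardware.

In this method, all devices, whether or not they are currently requesting an interrupt, are connected in series. The device with the highest priority is placed in the first position (nearest the CPU), followed by devices of successively lower priority, with the lowest-priority device last. All devices share a common interrupt request line, and the interrupt acknowledge line is daisy-chained through the modules. When the CPU acknowledges an interrupt, the acknowledge signal enters the priority-in (PI) input of the first device. A device with no pending interrupt passes the signal on through its priority-out (PO) output to the PI input of the next device. A device with a pending interrupt that receives PI = 1 blocks the signal (PO = 0) and places its vector address on the data bus, so the CPU can branch to that device's service routine.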
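The PI/PO rule of a daisy chain can be simulated directly. This is a sketch, not a gate-level model: the function name and the vector addresses in the example are hypothetical, and device 0 is assumed to be the one nearest the CPU.

```python
def daisy_chain(requests, vector_addresses):
    """Propagate the interrupt acknowledge through a daisy chain.

    requests[i] is True if device i has a pending interrupt; device 0 is
    nearest the CPU and has the highest priority. Returns
    (winning device, its vector address, PI value seen by each device).
    """
    # The CPU sends an acknowledge only if the shared request line is active.
    pi = 1 if any(requests) else 0
    winner = None
    pi_seen = []
    for device, pending in enumerate(requests):
        pi_seen.append(pi)
        if pi == 1 and pending:
            winner = device   # captures the acknowledge and puts its vector on the bus
            po = 0            # blocks the acknowledge from lower-priority devices
        else:
            po = pi           # no pending request (or PI = 0): pass PI through
        pi = po               # this device's PO drives the next device's PI
    vad = vector_addresses[winner] if winner is not None else None
    return winner, vad, pi_seen


# Hypothetical vector addresses for three devices.
print(daisy_chain([False, True, True], [0x20, 0x24, 0x28]))  # (1, 36, [1, 1, 0])
```

Device 2 also has a request pending, but it sees PI = 0 and must wait until device 1 has been serviced.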

Q. Explain the interrupt handling mechanism in computer system architecture.

Ans:

Interrupts in Computer Architecture

An interrupt in computer architecture is a signal that requests the processor to suspend its current execution and service the interrupt that has occurred.

To service the interrupt the processor executes the corresponding interrupt service routine (ISR).

After the execution of the interrupt service routine, the processor resumes the execution of the suspended program.

Interrupts are of two types: hardware interrupts and software interrupts.

Types of Interrupts in Computer Architecture

Although interrupts come in various forms, they are broadly classified as follows.

1. Hardware Interrupts

If a processor receives an interrupt request from an external I/O device, it is termed a hardware interrupt. Hardware interrupts are further divided into maskable and non-maskable interrupts.

- Maskable Interrupt: A hardware interrupt that can be ignored or delayed for some time, for example while the processor is executing a higher-priority program, is termed a maskable interrupt.

- Non-Maskable Interrupt: A hardware interrupt that can neither be ignored nor delayed and must be serviced immediately by the processor is termed a non-maskable interrupt.

2. Software Interrupts

Software interrupts are generated by the executing program itself, either when a particular condition is met or when a system call is made.

Interrupt Cycle

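A normal instruction cycle consists of an instruction fetch followed by execution. To accommodate interrupts that occur during normal instruction processing, an interrupt cycle is added to the normal instruction cycle, as shown in the figure below.

After executing the current instruction, the processor checks the interrupt signal to see whether any interrupt is pending. If no interrupt is pending, the processor fetches the next instruction in sequence. If an interrupt is pending (and interrupts are enabled), the processor saves the return address (the current contents of the program counter), loads the program counter with the starting address of the interrupt service routine and executes it; when the routine finishes, the saved address is restored and the suspended program resumes.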
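The instruction cycle with an added interrupt cycle can be sketched as a loop. This is a simplified model under stated assumptions: instructions are hypothetical names that are only recorded, `pending_after` marks the instructions after which an interrupt arrives, and `interrupts_enabled=False` models a masked (disabled) maskable interrupt.

```python
def run_with_interrupts(program, pending_after, isr, interrupts_enabled=True):
    """Fetch-execute loop with an interrupt cycle after every instruction.

    program:       list of instruction names (hypothetical)
    pending_after: indices of instructions after which an interrupt is pending
    isr:           instruction names of the interrupt service routine
    Returns the order in which instructions were executed.
    """
    trace = []
    pc = 0
    while pc < len(program):
        current = pc
        instruction = program[pc]   # fetch
        pc += 1                     # PC now points to the next instruction
        trace.append(instruction)   # execute (here: just record it)
        # Interrupt cycle: checked only after the current instruction completes.
        if interrupts_enabled and current in pending_after:
            return_address = pc     # save the return address
            trace.extend(isr)       # execute the interrupt service routine
            pc = return_address     # restore PC and resume the program
    return trace


print(run_with_interrupts(["I0", "I1", "I2"], {1}, ["ISR"]))
# ['I0', 'I1', 'ISR', 'I2']
```

The interrupt that arrives while `I1` is executing is serviced only after `I1` completes, and the program then continues with `I2`.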

******************************************************************************

For Any Query Contact:

~pradeep

Educational Purpose Only: The information provided on this blog is for general informational and educational purposes only. All content, including text, graphics, images, and other material contained on this blog, is intended to be a resource for learning and should not be considered as professional advice.

No Professional Advice: The content on this blog does not constitute professional advice, and you should not rely on it as a substitute for professional consultation, diagnosis, or treatment. Always seek the advice of a qualified professional with any questions you may have regarding a specific issue.

Accuracy of Information: While I strive to provide accurate and up-to-date information, I make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability, or availability with respect to the blog or the information, products, services, or related graphics contained on the blog for any purpose. Any reliance you place on such information is therefore strictly at your own risk.

External Links: This blog may contain links to external websites that are not provided or maintained by or in any way affiliated with me. Please note that I do not guarantee the accuracy, relevance, timeliness, or completeness of any information on these external websites.

Personal Responsibility: Readers of this blog are encouraged to do their own research and consult with a professional before making any decisions based on the information provided. I am not responsible for any loss, injury, or damage that may result from the use of the information contained on this blog.

Contact: If you have any questions or concerns regarding this disclaimer, please feel free to contact me at my email: pradeep14335@gmail.com